The ABC’s of measuring the user experience of your product or service

A comprehensive list of different UX measurements and usability questionnaires with helpful tips on which ones to use to meet your research goals and how to implement them.

User research helps every member of a product team determine whether their concepts, designs, or products are working as intended and meeting specific goals. In user research, a balance exists between quantitative and qualitative data.

A research yin and yang.

Quantitative data often shows ‘what’ is wrong with a product or service, and qualitative data reveals ‘why’ the problem exists.

This balance seems simple in theory but doesn’t always exist in practice. All-encompassing user research, combining both quantitative and qualitative methods, takes time and resources.

According to a report from Nielsen Norman Group (NNGroup), only 27 percent of UX professionals surveyed incorporate quantitative research at least once in their projects. Additionally, only 24 percent of respondents reported that they use both quantitative and qualitative data to evaluate the success of a design.

This isn’t balanced.

Perhaps the most distressing finding from the study is that 18 percent of UX professionals surveyed answered, “We don’t really know,” when asked how they measure the success of a design.

Teams should never be left wondering how to measure the success (or failure) of their product experience. Yet when I began searching for quantitative methods to measure user experience, I was inundated with questionnaires, research studies, and commentaries on the different metrics. It was hard to determine which tool would best meet my team’s research goals.

I wanted a comprehensive list of tools and methods that would help me incorporate more quantitative research into my workflow, with practical tips on each one so I could quickly determine which would be most effective for my specific research needs.

If you build it, they will come.

I couldn’t find one. So, I built one.

This list covers a variety of quantitative research methods, all of which help teams measure or benchmark the user experience of their product or service.

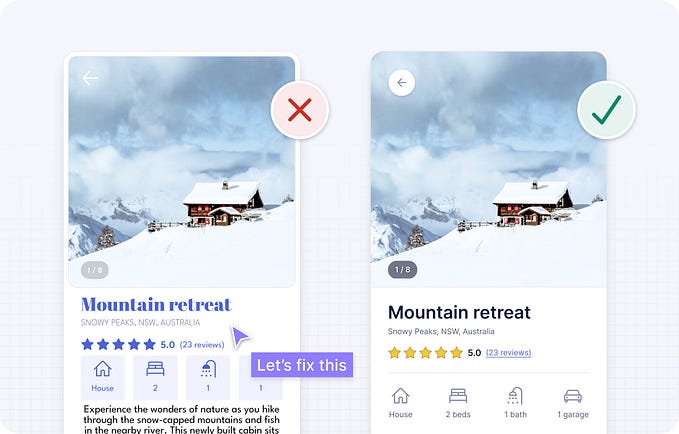

To encourage you to implement these methods in your workflow, I designed them as 5" × 7" cards. Each card has information about the method, tips on when to use it and when not to, and steps on how to use it.

Read them. Use them. But, don’t abuse them. Research is all about balance.

SUPR-Q: Standardized User Experience Percentile Rank Questionnaire

The SUPR-Q was developed and validated through psychometric qualification, and is used for measuring the essential aspects of a website in just 8 multiple choice questions. You can compare your SUPR-Q score to other similar websites using the normalized database created and maintained for the SUPR-Q.

Quick Facts:

# of items: 8 item questionnaire

Used for Testing: Usability, Appearance, Trust, Loyalty

Measurement: 5 point option scale (Strongly Disagree(1) — Strongly Agree(5)) for 7 of the questions, and includes the NPS as its last question using an 11 point scale.

Scoring: If you use the SUPR-Q calculator, the scoring is done for you. To score by hand, halve the score for the ‘likely to recommend’ question, then average all eight scores. This is your SUPR-Q score.

Reference: SUPR-Q: A Comprehensive Measure of the Quality of the Website User Experience
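For readers scoring by hand, the by-hand method in the Scoring line can be sketched in Python. This is a minimal sketch, assuming one participant's eight responses arrive in questionnaire order with the likelihood-to-recommend item last; the function name `supr_q_raw_score` is hypothetical. It produces only the raw score. The percentile ranks come from the licensed normalized database and are not reproduced here.

```python
def supr_q_raw_score(responses: list[float]) -> float:
    """Raw SUPR-Q score for one participant (not a percentile rank).

    `responses` holds the eight answers in questionnaire order: items 1-7
    on the 1-5 agreement scale, then item 8 (likelihood to recommend) on
    the 0-10 scale.
    """
    if len(responses) != 8:
        raise ValueError("SUPR-Q expects exactly 8 responses")
    *agreement_items, likely_to_recommend = responses
    # Halve the 0-10 item so it sits on a 0-5 range like the other items,
    # then average all eight values.
    return (sum(agreement_items) + likely_to_recommend / 2) / 8


print(supr_q_raw_score([4, 4, 5, 4, 3, 4, 5, 8]))  # prints 4.125
```

For a study-level figure you would typically average these raw scores across participants before comparing them with anything else.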

Why?

- Use the SUPR-Q if your goal is to compare or benchmark your site against competitors’ sites or other websites to see how your site’s usability, appearance, trust, and loyalty compare.

- With only 8 multiple choice items, the test is relatively short and easy to understand, possibly leading to higher completion rates.

- The final question of the SUPR-Q is the Net Promoter Score. Using the SUPR-Q kills two birds with one stone if you’re also interested in testing customer or user loyalty.

What’s the catch?

- Most of the value of the SUPR-Q lies in the normalized database you compare your scores against, and the database isn’t free. A one-year license costs between $3,000 and $5,000.

How to do it

- Have users of a website answer the 8 SUPR-Q items. This can be done after all tasks have been completed in a usability study, or as an online intercept survey on your website.

- Once you’ve collected the SUPR-Q responses, paste the results into the SUPR-Q calculator. The calculator automatically generates percentile ranks for a global score and each of the four subscales. The calculator can be accessed with a SUPR-Q license.

- If you have the full SUPR-Q license, you can compare your results with other similar websites to see where your score stands against your competitors.

SUS: System Usability Scale

The SUS is the ‘Quick and Dirty’ usability scale. It’s the industry standard for evaluating a product’s usability and has been referenced in over 1,300 articles and publications. Chances are, if you’ve heard of benchmarking, you’ve heard of the System Usability Scale.

Quick Facts:

# of items: 10 item questionnaire

Used for Testing: Usability, Learnability

Measurement: 5 point option scale (Strongly Disagree(1) — Strongly Agree(5)).

Scoring: Scores range from 0–100 in 2.5 point increments. According to a study by MeasuringU, the average SUS score is around 68.

Reference: SUS: A ‘quick and dirty’ usability scale

Why?

- The SUS is one of the simplest and easiest evaluation questionnaires to administer. You don’t have to be a qualified UX researcher or designer to implement a SUS on your website.

- You don’t need to recruit a very large sample size to still get reliable, valid results from your SUS evaluation.

What’s the catch?

- The scoring system for a SUS score is more complex than other evaluation questionnaires.

- SUS scores are out of 100, but they aren’t a percentage. You can use this score to compare against other scores, but it isn’t the percentage of usability you’ve met.

How to do it

- Decide how you will administer the SUS: as an online survey or as a pen-and-paper assessment. Typically, this assessment happens after users have interacted with your website.

- Once you’ve collected the responses from each participant, you calculate your score. For odd SUS items, subtract one from the user response. For even SUS items, subtract the user response from 5. Add up the converted responses for each user and multiply that total by 2.5. This converts the range of possible values from 0 to 100 instead of from 0 to 40.

- You can compare this score against other sites’ SUS scores. The SUS can also be used in an A/B testing format: have two different groups of users interact with two different designs, and use a SUS to evaluate each group’s experience. The results from this type of test can help determine which design to implement.
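The by-hand SUS calculation described in these steps can be sketched in Python. This is a minimal illustration rather than an official scoring tool: `sus_score` and `mean_sus` are hypothetical helper names, and each participant's ten responses are assumed to be on the 1–5 scale in questionnaire order.

```python
from statistics import mean


def sus_score(responses: list[int]) -> float:
    """Return one participant's SUS score (0-100) from ten 1-5 responses."""
    if len(responses) != 10:
        raise ValueError("SUS expects exactly 10 responses")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1  # odd items: subtract one from the response
        else:
            total += 5 - response  # even items: subtract the response from 5
    # The converted total ranges from 0 to 40; multiplying by 2.5 maps it to 0-100.
    return total * 2.5


def mean_sus(participants: list[list[int]]) -> float:
    """Average SUS score across participants (e.g., one A/B design group)."""
    return mean(sus_score(r) for r in participants)


design_a = [[4, 2, 4, 2, 4, 2, 4, 2, 4, 2], [5, 1, 4, 2, 5, 1, 4, 2, 5, 1]]
print(mean_sus(design_a))  # prints 82.5
```

For an A/B test, call `mean_sus` once per design group and compare the two averages; deciding whether a difference is meaningful still calls for an appropriate statistical test.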

NPS: Net Promoter Score

NPS was created in 2003 to give executives a better way to know how customers feel about a company, product, or service. The score is measured with a single-question survey and reported as a number from -100 to 100; a higher score is better.

Quick Facts:

# of items: 1 item questionnaire

Used for Testing: Customer Loyalty

Measurement: 11 point option scale (Not at all Likely(0) — Extremely Likely(10)).

Scoring: Index ranging from -100 to 100. Customers are classified into 3 different categories: Detractors, Passives, and Promoters depending on their overall score.

Reference: A Holistic Examination of Net Promoter

Why?

- The NPS is one simple question that most users can understand. You’re not asking much of your users, possibly leading to higher completion rates than other questionnaires.

- Using NPS is helpful when your team wants to set both internal performance benchmarks and external competitor benchmarks.

What’s the catch?

- NPS doesn’t use the mean of all of the scores; it collapses responses into three categories. As a result, small improvements to a service can go unnoticed: a customer whose rating rises from 3 to 5 is still a Detractor.

- The 11-point scale is large, and the difference between a 7 and an 8 isn’t clear to a participant.

- NPS asks users to predict their future behavior (“How likely are you to…”) rather than report past behavior, and people are not perfectly accurate predictors of what they will do.

How to do it

- Identify the touchpoints that matter most to your product or service and would be good moments to gauge your customers’ sentiment with an NPS.

- Once you’ve received most of your NPS responses, gather all of the response data in one location; a spreadsheet is recommended.

- Go through all of the scores and classify each respondent as a Detractor, Passive, or Promoter. Then count the number of respondents in each group.

- Calculate the percentage total for each group by dividing the total number of each group by the total number of responses.

- Subtract the percentage of Detractors from the percentage of Promoters. This is your NPS.
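The NPS arithmetic in these steps can be sketched in Python. This sketch assumes the standard category cut-offs (Detractors 0–6, Passives 7–8, Promoters 9–10), which the steps themselves don't list; `net_promoter_score` is a hypothetical name.

```python
def net_promoter_score(ratings: list[int]) -> float:
    """Return the NPS (-100 to 100) for a list of 0-10 ratings."""
    if not ratings:
        raise ValueError("need at least one rating")
    if any(not 0 <= r <= 10 for r in ratings):
        raise ValueError("ratings must be on the 0-10 scale")
    promoters = sum(1 for r in ratings if r >= 9)   # 9-10
    detractors = sum(1 for r in ratings if r <= 6)  # 0-6
    # Passives (7-8) count toward the total but neither add nor subtract.
    # (promoters - detractors) / total * 100 equals %Promoters - %Detractors.
    return (promoters - detractors) * 100 / len(ratings)


print(net_promoter_score([10, 10, 10, 8, 0]))  # prints 40.0
```

In that example there are 3 Promoters (60 percent), 1 Passive, and 1 Detractor (20 percent), so the NPS is 60 − 20 = 40.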

SEQ: Single Ease Question

The SEQ is a single-question questionnaire administered immediately after a task to gauge a participant’s attitude toward the task they just attempted. This post-task questionnaire is reliable and efficient, taking very little of a user’s time and attention to complete.

Quick Facts:

# of items: 1 item questionnaire

Used for Testing: Task Difficulty

Measurement: 7 point option scale (Very Difficult (1) to Very Easy (7)).

Scoring: The average of the SEQ scores for each specific task across users, ranging from 1–7.

Reference: Comparison of Three One-Question, Post-Task Usability Questionnaires

Why?

- As a post-task questionnaire, the SEQ gathers ‘Top of Mind’ data from the user since it is implemented immediately after every task in a usability test concludes.

- Very short, simple question that can be administered verbally, digitally, or on paper, allowing it to easily fit into the flow of a usability test without taking up much time or distracting a user.

What’s the catch?

- The 7-point scale is large, and the difference between a 6 and a 7 isn’t clear to a participant.

- Users sometimes rate a task as easy even after you watched them fail it.

- The SEQ doesn’t measure overall usability or satisfaction with a product like many other questionnaires, and the scores are really only used to compare difficulty across your product or website.

How to do it

- The SEQ is used after a task. In order to use the SEQ you need to plan a usability test and write out each of your tasks.

- After each task is completed in your usability test, prompt the user with the SEQ, and have the user record their rating.

- You can average the scores for each task and compare them against other tasks, or compare task scores across respondent user archetypes.
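Averaging SEQ ratings per task, or per archetype and task, is a simple grouping step. The Python sketch below assumes ratings are recorded as (key, rating) pairs on the 1–7 scale; the function name and the task names (`checkout`, `search`) are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def average_seq(ratings):
    """Average SEQ ratings (1-7) per group key.

    `ratings` is an iterable of (key, rating) pairs. The key can be a task
    name, or an (archetype, task) tuple to compare archetypes.
    """
    grouped = defaultdict(list)
    for key, rating in ratings:
        if not 1 <= rating <= 7:
            raise ValueError(f"SEQ ratings must be 1-7, got {rating}")
        grouped[key].append(rating)
    return {key: mean(values) for key, values in grouped.items()}


session_data = [("checkout", 7), ("checkout", 5), ("search", 3), ("search", 4)]
result = average_seq(session_data)
print(result)
```

To compare archetypes instead, use keys such as `("new user", "checkout")`; the grouping logic stays the same.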

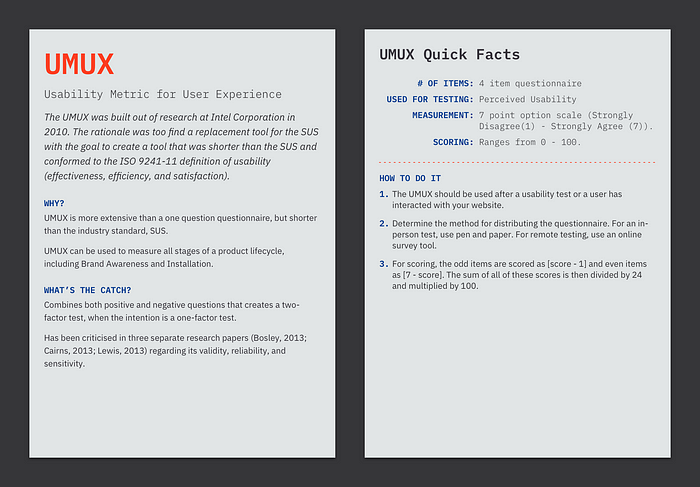

UMUX: Usability Metric for User Experience

The UMUX was built out of research at Intel Corporation in 2010. The goal was to create a replacement for the SUS that was shorter and conformed to the ISO 9241-11 definition of usability (effectiveness, efficiency, and satisfaction).

Quick Facts:

# of items: 4 item questionnaire

Used for Testing: Perceived Usability

Measurement: 7 point option scale (Strongly Disagree(1) — Strongly Agree (7)).

Scoring: Ranges from 0–100.

Reference: The Usability Metric for User Experience

Why?

- The UMUX is more extensive than a single-question questionnaire, but shorter than the industry standard SUS.

- UMUX can be used to measure all stages of a product lifecycle, including Brand Awareness and Installation.

What’s the catch?

- Mixing positively and negatively worded items produces a two-factor structure, even though the UMUX is intended to measure a single factor.

- Has been criticized in three separate research papers (Bosley, 2013; Cairns, 2013; Lewis, 2013) regarding its validity, reliability, and sensitivity.

How to do it

- The UMUX should be used after a usability test or a user has interacted with your website.

- Determine the method for distributing the questionnaire. For an in-person test, use pen and paper. For remote testing, use an online survey tool.

- For scoring, the odd items are scored as [score - 1] and even items as [7 - score]. The sum of these scores is then divided by 24 and multiplied by 100.
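The UMUX scoring rule can be sketched in Python as follows. This is a minimal sketch, assuming one participant's four responses on the 1–7 scale in questionnaire order; `umux_score` is a hypothetical name.

```python
def umux_score(responses: list[int]) -> float:
    """Return a UMUX score (0-100) from four responses on the 1-7 scale."""
    if len(responses) != 4:
        raise ValueError("UMUX expects exactly 4 responses")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1  # odd items: score - 1
        else:
            total += 7 - response  # even items: 7 - score
    # Each item contributes 0-6, so the maximum total is 24.
    return total / 24 * 100
```

Dividing by 24 works because each of the four adjusted items tops out at 6, so a perfect response set maps to 100.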

UMUX-LITE: Usability Metric for User Experience Lite

UMUX-LITE is a two-item questionnaire designed to resolve some of the criticisms of the UMUX. It includes only the two positive items from the UMUX, but is just as reliable and valid as the UMUX.

Quick Facts:

# of items: 2 item questionnaire

Used for Testing: Perceived Usability

Measurement: 7 point option scale (Strongly Disagree(1) — Strongly Agree (7)).

Scoring: Ranges from 0–100.

Reference: UMUX-LITE: when there’s no time for the SUS

Why?

- UMUX-LITE is 80 percent shorter than the SUS (10 item questionnaire) and 50 percent shorter than the UMUX (4 item questionnaire).

- Addresses the main criticisms of the UMUX by using only its positive items, making it a true unidimensional assessment. This reduces confusion and aligns it more closely with the SUS.

What’s the catch?

- UMUX and UMUX-LITE are newer questionnaires with less published research on the validity, sensitivity, and reliability of the questionnaire than similar questionnaires (SUS, NASA-TLX).

- Calculating the score of the UMUX-LITE requires a regression equation, adding a layer of complication when calculating a score.

How to do it

- The UMUX-LITE should be used after a usability test or a user has interacted with your website.

- Determine the method for distributing the questionnaire. For an in-person test, use pen and paper. For remote testing, use an online survey tool.

- For basic UMUX-LITE scoring, subtract one from the score of each questionnaire item. Add up both of these adjusted scores and divide their sum by 12. Multiply this number by 100.

- To compare your UMUX-LITE score to a SUS score, convert your UMUX-LITE score using this regression equation:

$$\text{UMUX-LITE}_r = 0.65 \times \left( \frac{(\text{Item 1 Score} + \text{Item 2 Score} - 2) \times 100}{12} \right) + 22.9$$
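Both the basic UMUX-LITE score and the SUS-comparable regression score from this section can be computed together. The Python sketch below assumes both items are on the 1–7 scale; the function name `umux_lite` is hypothetical, and the constants 0.65 and 22.9 are taken directly from the regression equation in the text.

```python
def umux_lite(item1: int, item2: int) -> tuple[float, float]:
    """Return (basic score, SUS-comparable regression score) for UMUX-LITE.

    Both items are on the 1-7 agreement scale.
    """
    for item in (item1, item2):
        if not 1 <= item <= 7:
            raise ValueError("UMUX-LITE items must be on the 1-7 scale")
    # Basic score: subtract one from each item, sum, divide by 12, times 100.
    basic = (item1 - 1 + item2 - 1) / 12 * 100
    # Regression adjustment so the score can be compared with SUS scores.
    regression = 0.65 * basic + 22.9
    return basic, regression


basic, sus_comparable = umux_lite(6, 5)
print(round(basic, 1), round(sus_comparable, 1))
```

Note that the regression compresses the range: a perfect UMUX-LITE response (7, 7) maps to roughly 87.9 on the SUS-comparable scale, not 100.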

WAMMI: Website Analysis and Measurement Inventory

WAMMI is built around a standardized 20-item questionnaire and a unique international database. It measures user satisfaction by asking visitors to your website to compare their expectations with what they actually experience.

Quick Facts:

# of items: 20 item questionnaire

Used for Testing: Attractiveness, Controllability, Efficiency, Helpfulness, Learnability

Measurement: 5 option scale (Strongly Agree — Strongly Disagree).

Scoring: Scores are given for each of the test’s scales, along with an overall Global Usability Score, the average of the five usability subscales. WAMMI scores are expressed as percentiles, with 50 as the average.

Reference: Official WAMMI Informational Site

Why?

- WAMMI can be used to benchmark very complex websites, such as Intranets and Corporate Portals.

- Visitor satisfaction for your site can be compared with values from the WAMMI reference databases that contain data from over 320 surveys.

What’s the catch?

- For large, popular websites, it typically takes two weeks to get a reasonable sample of results from both frequent and less frequent users of your site. For smaller websites, it requires much longer than two weeks.

- At 20 questions, the questionnaire is long, which makes it harder to get visitors to volunteer complete and honest responses.

How to do it

- Contact the WAMMI team about setting up WAMMI on your site.

- The team will help you configure your WAMMI evaluation to your specifications. Your configuration is hosted on their servers, and they will provide you with a URL for the questionnaire.

- Once you’re ready to start collecting data, you place a link or button on your website, preferably in a central location to garner a large number of respondents.

- When there is a sufficient number of responses, the evaluation is automatically terminated. You will receive an electronic report within two working days from the WAMMI team.

PSSUQ: Post Study System Usability Questionnaire

PSSUQ was developed at the IBM Design Center in 1992 specifically for scenario-based usability studies. The questionnaire contains 19 statements describing the system, and users agree or disagree with each statement using a 7 point scale.

Quick Facts:

# of items: 19 item questionnaire

Used for Testing: System Usefulness, Information Quality, Interface Quality

Measurement: 7 option scale (Strongly Disagree(1) — Strongly Agree(7)).

Scoring: PSSUQ has four different scores: OVERALL (Average of questions 1–19), SYSUSE (Average of questions 1–8), INFOQUAL (Average of questions 9–15), INTERQUAL (Average of questions 16–18).

Reference: Psychometric evaluation of the post-study system usability questionnaire: The PSSUQ

Why?

- PSSUQ is very similar to the SUS, but its questions are more targeted and designed specifically for scenario-based usability tests.

- PSSUQ provides subscores for the different subscales (System Usefulness, Information Quality, Interface Quality) providing a more detailed report on how users are assessing these factors of your site.

What’s the catch?

- The PSSUQ is a long test (19 scale questions) that includes open-ended ‘comment’ fields. This length may lead to lower response rates or to respondents not taking the time to give quality answers to each question.

How to do it

- Like the SUS, PSSUQ is most commonly used and administered after a usability test or users have interacted with your website.

- The test is easy to set up (questions are pre-written), and can be administered through paper and pencil or a digital survey.

- Once all PSSUQ questionnaires have been administered, you can organize all of the data into one location, preferably a spreadsheet.

- The PSSUQ includes one global score, the average score of all the questions, and three separate scores for each of the different subscales: SYSUSE, INFOQUAL, and INTERQUAL.

- SYSUSE (System Usefulness) is the average of questions 1–8. INFOQUAL (Information Quality) is the average of questions 9–15. INTERQUAL (Interface Quality) is the average of questions 16–18.
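The four PSSUQ scores are plain averages over item ranges, which the Python sketch below computes. It assumes the 19-item version described here, with every item answered and responses listed in item order; `pssuq_scores` is a hypothetical name, and handling of skipped items is deliberately left out.

```python
from statistics import mean


def pssuq_scores(responses: list[float]) -> dict[str, float]:
    """Return the four PSSUQ scores from 19 responses in item order."""
    if len(responses) != 19:
        raise ValueError("this PSSUQ version expects 19 responses")

    def average(first: int, last: int) -> float:
        # Items are numbered from 1, so item n is responses[n - 1];
        # the slice end is exclusive, so item `last` is included.
        return mean(responses[first - 1:last])

    return {
        "OVERALL": average(1, 19),
        "SYSUSE": average(1, 8),
        "INFOQUAL": average(9, 15),
        "INTERQUAL": average(16, 18),
    }
```

As in the scoring rules, item 19 counts toward OVERALL but belongs to none of the three subscales.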

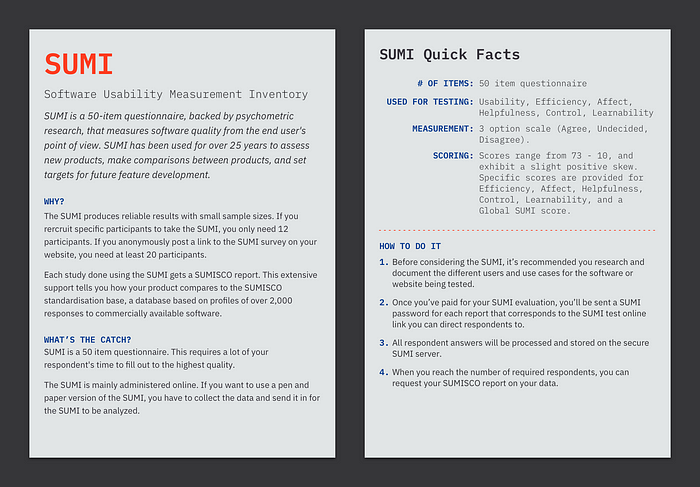

SUMI: Software Usability Measurement Inventory

SUMI is a 50-item questionnaire, backed by psychometric research, that measures software quality from the end user’s point of view. SUMI has been used for over 25 years to assess new products, make comparisons between products, and set targets for future feature development.

Quick Facts:

# of items: 50 item questionnaire

Used for Testing: Usability, Efficiency, Affect, Helpfulness, Control, Learnability

Measurement: 3 option scale (Agree, Undecided, Disagree).

Scoring: Scores range from 10 to 73 and exhibit a slight positive skew. Specific scores are provided for Efficiency, Affect, Helpfulness, Control, and Learnability, along with a Global SUMI score.

Reference: SUMI: the Software Usability Measurement Inventory

Why?

- The SUMI produces reliable results with small sample sizes. If you recruit specific participants to take the SUMI, you need only 12 participants. If you anonymously post a link to the SUMI survey on your website, you need at least 20 participants.

- Each study done with the SUMI gets a SUMISCO report. This extensive report tells you how your product compares to the SUMISCO standardisation base, a database built from profiles of over 2,000 responses to commercially available software.

What’s the catch?

- SUMI is a 50 item questionnaire, so completing it carefully takes a lot of your respondents’ time.

- The SUMI is mainly administered online. If you want to use a pen and paper version, you have to collect the data and send it in for analysis.

How to do it

- Before considering the SUMI, it’s recommended you research and document the different users and use cases for the software or website being tested.

- Once you’ve paid for your SUMI evaluation, you’ll receive a SUMI password for each report, tied to the online SUMI test link you direct respondents to.

- All respondent answers will be processed and stored on the secure SUMI server.

- When you reach the number of required respondents, you can request your SUMISCO report on your data.

QUIS: Questionnaire for User Satisfaction

The QUIS is used to gather users’ opinions about the usability of an interface and to evaluate user acceptance of that interface. The QUIS is now at version 7.0, which includes demographic information, a measure of overall system satisfaction along 6 scales, and measures of 9 specific interface factors.

Quick Facts:

# of items: 27 item questionnaire

Used for Testing: Interaction Satisfaction, Terminology, System Feedback, Learning Factors, System Capabilities

Measurement: 9 point scale with unique antonym pairs at each end of the scale (e.g., Terrible (0) to Wonderful (9)). Each question also has an N/A option.

Scoring: Scores are the average of each question ranging from 0–9.

Reference: QUIS Informational Site

Why?

- The QUIS has been shown to be effective with smaller sample sizes (<10 respondents).

- The QUIS is helpful if you need to gather information and scores on specific subsets of your interface: Interaction Satisfaction, Terminology, System Feedback, Learning Factors, and System Capabilities.

What’s the catch?

- The QUIS is limited to use on products with a digital interface.

- Uses a 9 point range, which adds a lot of noise to the rating scale. The difference between a 6 and a 7 isn’t obvious to a respondent.

How to do it

- Identify your target user sample. This sample should be representative of the typical users of your website, but it shouldn’t be defined as ‘everyone who uses our website.’

- The QUIS is commonly administered after a usability test. Make sure each task in the usability test is a workflow you want to test.

- Once your users have finished all tasks in the test, administer the QUIS, and answer any questions they may have about the QUIS.

- You can begin scoring once a user has completed the questionnaire. An overall QUIS score can be calculated by averaging over all of the questions.
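An overall QUIS score for one respondent is an average over the answered questions. The Python sketch below assumes N/A answers are recorded as `None` and excluded from the average; the text doesn't say how N/A should be treated, so that choice is an assumption, and `quis_overall` is a hypothetical name.

```python
from statistics import mean


def quis_overall(responses):
    """Average one respondent's QUIS ratings, skipping N/A answers.

    `responses` holds a 0-9 rating per question, or None where the
    respondent chose N/A (assumed to be excluded from the average).
    """
    answered = [r for r in responses if r is not None]
    if not answered:
        raise ValueError("no rated questions to average")
    return mean(answered)
```

Counting N/A as zero instead would drag scores down for respondents who skipped questions that didn't apply to them, which is why this sketch leaves them out.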

PURE: Practical Usability Rating by Experts

PURE is a form of expert review in which trained evaluators identify usability problems by rating the usability of a product’s key tasks. The method was validated by comparing PURE ratings with metrics collected from independently run usability tests on three software products.

Quick Facts:

# of items: 5–10 different tasks that your target audience must be able to accomplish for success.

Used for Testing: Appeal, Usability, Usefulness

Measurement: Each step in each of the predetermined fundamental tasks receives a rating from 1 (Easy) to 3 (Very Hard).

Scoring: Scores are assessed at the task level and rated from (1–3). Scores are given a corresponding color: 1=green, 2=orange, 3=red. The total usability score is the sum of each task score.

Reference: Practical Usability Rating by Experts (PURE): A Pragmatic Approach for Scoring Product Usability

Why?

- PURE doesn’t require recruiting users to take a typical usability questionnaire. It’s cheap, quick, and only requires three different usability researchers or evaluators trained on the PURE method.

- Teams can save PURE scorecards for successive versions of a product or website to see whether their changes are improving its overall usability.

- PURE can be used to evaluate prototypes of all fidelity levels, including click-through InVision prototypes or user flows.

What’s the catch?

- A successful PURE study requires the team behind the product being tested to agree on a target user type and specific tasks for this target user type to complete. Consensus can sometimes be hard to form, especially when some stakeholders believe ‘all users’ are their target user type.

How to do it

- Identify your target user type, select the 5–10 fundamental tasks, indicate the ‘happy paths’ (desired paths) for each task, and set boundaries for each task, labeled in the PURE scoresheet.

- Collect PURE ratings from each evaluator as they go through the different tasks, and calculate the inter-rater reliability of the raters’ independent scores.

- Let the evaluators discuss their ratings and come to consensus on a single score for each step.

- Sum the PURE scores for each task and the entire product. Attribute each product, step, and task with the appropriate color.
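Once evaluators have agreed on a consensus rating for each step, the summing and step coloring can be sketched in Python. This sketch assumes the consensus ratings are stored per task as a list of 1–3 step ratings; the function name and task names are hypothetical. It colors only individual steps with the 1=green, 2=orange, 3=red mapping, because the rule for coloring whole tasks and the product isn't spelled out here.

```python
PURE_COLORS = {1: "green", 2: "orange", 3: "red"}


def pure_scorecard(tasks: dict[str, list[int]]):
    """Summarize consensus PURE step ratings.

    `tasks` maps each task name to the agreed 1-3 rating for each step.
    Returns (task scores, product score, step colors).
    """
    task_scores = {}
    step_colors = {}
    for task, steps in tasks.items():
        if any(rating not in PURE_COLORS for rating in steps):
            raise ValueError(f"PURE ratings must be 1, 2, or 3 (task: {task})")
        task_scores[task] = sum(steps)  # task score = sum of its step ratings
        step_colors[task] = [PURE_COLORS[rating] for rating in steps]
    product_score = sum(task_scores.values())  # product score = sum of task scores
    return task_scores, product_score, step_colors


scores, total, colors = pure_scorecard({"Sign up": [1, 1, 2], "Create report": [2, 3]})
```

Because lower ratings mean easier steps, a lower product score between two saved scorecards suggests improved usability.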

NASA-TLX: NASA Task Load Index

The NASA Task Load Index is a multidimensional assessment tool that rates perceived workload in order to assess a task, system, or team’s effectiveness or other aspects of overall system or team performance.

Quick Facts:

# of items: 21 item questionnaire: 15 pairing questions and 6 scales.

Used for Testing: Mental Demand, Physical Demand, Temporal Demand, Performance, Effort, Frustration

Measurement: Antonym pairs at each end (Low, Poor) 1–20 (High, Good).

Scoring: There are three different scores: raw rating, adjusted rating, and the overall workload scale.

Reference: NASA-TLX @ AMES

Why?

- Developed by the Human Performance Group at NASA’s Ames Research Center over a three-year development cycle that included more than 40 laboratory simulations, and cited in over 4,400 studies.

- The NASA-TLX has proven reliable in a variety of domains, including aviation, healthcare, and other complex, high-consequence industries.

What’s the catch?

- The NASA-TLX is laborious to set up, and its scoring and subscale weighting are complex.

- As a post-task questionnaire, it must be administered immediately after every key task in a test. This is time intensive and intrusive during a usability test.

How to do it

- Set up a usability test with predetermined target user types and specific tasks for the target user types to accomplish.

- During the test, once a user has completed a task, administer the questionnaire. It should be administered after every task. The weighting (pairing) procedure should be administered first, immediately followed by the scale questions.

- You can use the NASA-TLX software or app to do the scoring calculations for you, or you can score by hand. Every time a user selects a factor during the pairing questions, that factor gets a tally. Sum the tallies to get the weight for each factor. The raw rating is the user’s scale rating. The adjusted rating is the user’s scale rating multiplied by that factor’s sources-of-workload weight. The overall weighted workload score is the sum of all adjusted ratings divided by 15.
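The by-hand weighted scoring can be sketched in Python. This is a minimal sketch, assuming the 15 pairing answers are recorded as the name of the chosen factor and the raw ratings as a factor-to-rating dictionary; the function name is hypothetical, and the code works with whatever rating scale you collected. Since six factors form 15 pairs, each factor appears in exactly 5 pairings, so no weight can exceed 5.

```python
from collections import Counter

FACTORS = (
    "Mental Demand", "Physical Demand", "Temporal Demand",
    "Performance", "Effort", "Frustration",
)


def nasa_tlx(pair_choices: list[str], raw_ratings: dict[str, float]):
    """Weighted NASA-TLX scoring by hand.

    pair_choices: the factor the user picked in each of the 15 pairings.
    raw_ratings: the user's scale rating for each of the six factors.
    Returns (weights, adjusted ratings, overall weighted workload).
    """
    if len(pair_choices) != 15:
        raise ValueError("expected 15 pairwise choices")
    unknown = set(pair_choices) - set(FACTORS)
    if unknown:
        raise ValueError(f"unknown factors: {unknown}")
    tallies = Counter(pair_choices)
    # A factor that is never chosen gets weight 0.
    weights = {factor: tallies[factor] for factor in FACTORS}
    if any(w > 5 for w in weights.values()):
        raise ValueError("a factor can win at most 5 of the 15 pairings")
    adjusted = {f: raw_ratings[f] * weights[f] for f in FACTORS}
    overall = sum(adjusted.values()) / 15
    return weights, adjusted, overall


choices = (["Mental Demand"] * 5 + ["Temporal Demand"] * 2 + ["Performance"] * 3
           + ["Effort"] * 4 + ["Frustration"])
ratings = {"Mental Demand": 15, "Physical Demand": 2, "Temporal Demand": 10,
           "Performance": 6, "Effort": 12, "Frustration": 8}
weights, adjusted, overall = nasa_tlx(choices, ratings)
```

Because the weights always sum to 15, dividing by 15 makes the overall score a weighted average of the raw ratings.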

UEQ: User Experience Questionnaire

The UEQ is a quick and reliable questionnaire for measuring the user experience of interactive products. It includes both classical usability measurements and user experience aspects.

Quick Facts:

# of items: 26 item questionnaire.

Used for Testing: Attractiveness, Perspicuity, Efficiency, Dependability, Stimulation, Novelty

Measurement: 7 point rating scale with contrasting attributes at each end of the scale.

Scoring: Each item is rated from -3 to +3. -3 is the most negative answer, 0 is neutral, and +3 is the most positive answer. An overall average is calculated for each of the different scales.

Reference: Applying the User Experience Questionnaire (UEQ) in Different Evaluation Scenarios
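The free UEQ analysis tool does this scoring for you, but the -3 to +3 transformation and per-scale averaging can be sketched in Python. This sketch makes several assumptions not stated in the text: raw answers are recorded as positions 1–7 counted from the left, some items place their positive attribute on the left and must be flipped, and the item-to-scale mapping and reversed items in the example are hypothetical placeholders, not the official UEQ key.

```python
from statistics import mean


def ueq_scale_means(raw, scales, reversed_items=frozenset()):
    """Mean UEQ score (-3 to +3) per scale for one respondent.

    raw: item number -> marked position 1-7, counted from the left.
    scales: scale name -> item numbers belonging to it (hypothetical here).
    reversed_items: items whose positive attribute is on the left.
    """
    def transformed(item):
        value = raw[item] - 4  # positions 1..7 become -3..+3
        return -value if item in reversed_items else value

    return {name: mean(transformed(i) for i in items) for name, items in scales.items()}


example = ueq_scale_means(
    raw={1: 7, 2: 1, 3: 4},
    scales={"Attractiveness": [1, 2], "Novelty": [3]},  # hypothetical mapping
    reversed_items={2},                                  # hypothetical
)
```

Flipping reversed items before averaging keeps +3 as the most positive answer on every scale, matching the scoring description.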

Why?

- The UEQ measures a mix of classical usability aspects (efficiency, perspicuity, and dependability), user experience aspects (originality, stimulation), and attractiveness.

- The team behind the UEQ provides a free data analysis tool to help establish an accurate sample size, track scores, calculate scores, and produce reports.

- An additional KPI Calculation worksheet is available to calculate a KPI for your product based on the six different scales. A metric to make managers extremely happy.

What’s the catch?

- The UEQ provides a lot of valuable data and analysis on measuring the usability and user experience of a site, but it still doesn’t tell you the ‘why.’ This test should be paired with a usability test.

- You need a large sample size (more than 100 participants) to return the most reliable and valid results.

How to do it

- Set up a usability test with predetermined target user types and specific tasks for the target user types to accomplish.

- Administer the UEQ immediately after the user has finished all of the tasks, or has told you they are finished.

- Once each user has completed the UEQ, enter the data into the Excel spreadsheet available for free from the UEQ’s website. This spreadsheet is built to do the scoring and calculations for you.

- The spreadsheet tool creates benchmark graphs for you to compare the results of your UEQ with your competitors or see how your changes have advanced your product.

Download the cards

- Download the printable cards from this link.

- Cut the cards out on the dotted outline.

- DON’T cut down the middle of the card. Fold on the middle line to create the card.